Introduction

I have spent the last few weeks sharpening my Kubernetes skills, and one area I focused on was how to enable and use a Service Mesh in Kubernetes. A service mesh is a layer in your infrastructure that lets you control inbound and outbound traffic for any app or service that uses the network.

There is a wide range of Service Meshes available for Kubernetes. In this blog post I am going to concentrate on HashiCorp’s service mesh offering, Consul, though you may see others refer to it as Consul Connect. Consul Connect is a set of features that were added to Consul in 2018 to enable service mesh support.

These features consist of three parts: the Consul server component, the Consul client, and the sidecars that are deployed. The server component is responsible for persisting data, i.e. configurations, so it requires high availability; don’t run a single Consul server in a production environment. The client component resides on your node. It is responsible for reporting the health of each service running on that node as well as keeping track of the health of services on other nodes, and it sends this information back to the Consul server component. Another responsibility of the client is to configure and manage all sidecars. The sidecars are responsible for intercepting inbound and outbound network traffic through a proxy; Consul leverages Envoy to achieve this.

I have prepared two sample applications to demo how you can configure and use Consul as a Service Mesh in Kubernetes. The first app is a frontend application written in Blazor, and the second is a Web API written in .NET 6 using minimal APIs. The Blazor application calls the Web API to get a weather forecast. There is no data validation, authentication, or authorization in the apps, since the main focus of this post is to show how to use Consul.

Web API

The Web API is written using the Minimal APIs feature offered by .NET. The API generates an in-memory collection of WeatherForecast objects. The entire API can be configured as shown below.

| |

In the code above, the route handler MapGet maps incoming HTTP GET requests whose route matches “/weatherforecast”.

API Docker File

To run the API in Kubernetes I need to containerize the app. The following Dockerfile should do the trick.

| |

Then, to build the container image with Docker, execute the following command.

| |

The dot in the build command refers to the current working directory, which Docker uses as the build context. The -f flag is the path to the Dockerfile and the -t flag sets a tag; in my case I decided to append version 1.0.0 to my app. After executing the command above, run the following command to confirm the image was created.

| |

Blazor App

Next up, I have created a frontend application based on Blazor. The app uses the default Blazor template, and as part of the template, Blazor adds a weather forecast page. The data displayed is loaded from an in-memory collection. I am going to change the application to load the data from the Web API created above.

This is the WeatherForecastService that comes from the Blazor template.

| |

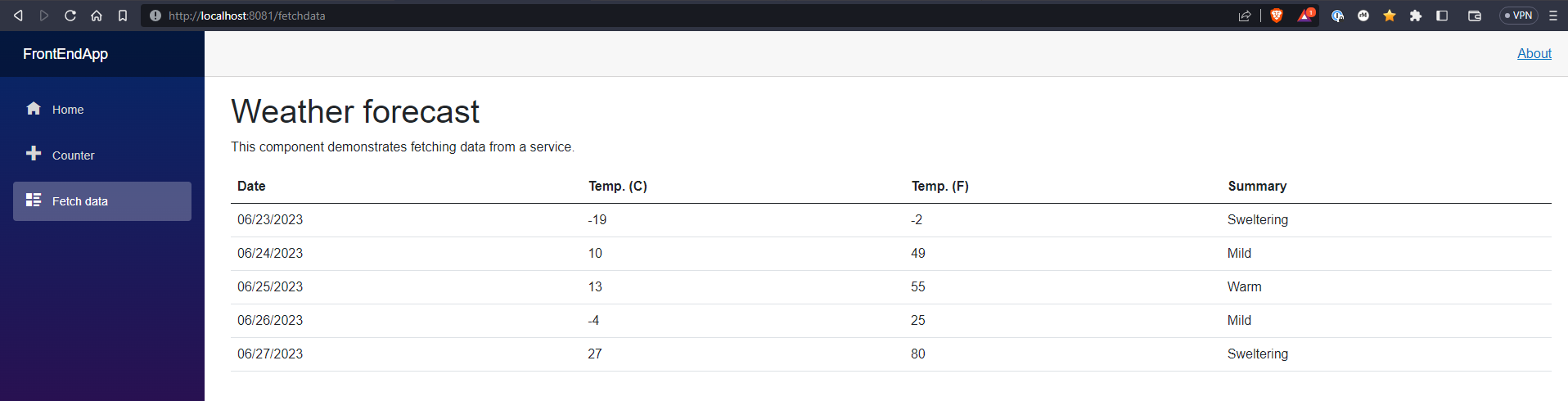

If you were to run the app and navigate to the forecast page you would see something similar to what is being shown on the screenshot below.

I’ll need to refactor the GetForecastAsync method to use an HTTP client to make a request to the Web API endpoint, https://localhost:7043/weatherforecast, and retrieve the WeatherForecast data from the API.

| |

The code above establishes a dependency between the Web API and the Blazor App: the two apps now communicate over the network. Once I introduce Consul into the mix, I will show how the network communication between these two apps can be secured by simply installing a Service Mesh.

Blazor Docker File

Just like the Web API, I will need to containerize the Blazor app. The following Dockerfile should do the trick.

| |

Then, to build the container image with Docker, execute the following command.

| |

Again, the dot in the build command refers to the current working directory, the -f flag is the path to the Dockerfile, and the -t flag sets a tag; in my case I decided to append version 1.0.0 to my app. After executing the command above, run the following command to confirm the image was created.

| |

Configuring Helm

I plan to use Helm to deploy my applications. Helm is the package manager for Kubernetes, and it is a great tool for managing Kubernetes applications: you can use it to define, install, and upgrade any Kubernetes application.

To use Helm I will need to create a Helm chart for each of the frontend and backend applications. Executing the following command will create a new Helm chart.

| |

Executing the command above creates a new chart titled “backendapp”. The chart is composed of a few files, as seen in the folder tree below.

| |

The deploy folder is the root directory for all my Helm charts. Within the chart’s folder you will find the Chart.yaml file, which is where all chart metadata is placed, e.g. the chart name. The charts folder is where you place any charts that your chart depends on. The templates folder contains the template files that Helm renders into Kubernetes manifests; they are written in Go’s template language.

The chart needs a few updates. First, in the Chart.yaml file, I will set the appVersion to 1.0.0. This is because the Docker image was tagged with 1.0.0 and I left the tag value empty in my values.yaml, so the chart falls back to the appVersion for the image tag.

| |
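A minimal sketch of what this change could look like, assuming the remaining fields are still the defaults that helm create scaffolds (the description and version values are those defaults, not values confirmed by this post):

```yaml
# Chart.yaml (sketch; only appVersion is changed from the scaffold)
apiVersion: v2
name: backendapp
description: A Helm chart for Kubernetes
type: application
# Version of the chart itself, independent of the application version
version: 0.1.0
# Used as the image tag when image.tag is left empty in values.yaml
appVersion: "1.0.0"
```

Quoting the appVersion keeps YAML from interpreting it as anything other than a string.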

The next change is to the values.yaml file. I need to change the image repository, since newly created Helm charts use the nginx image by default. The name of my container image is backend, so that is the value I will use, see below.

| |

The “pullPolicy” being set to “IfNotPresent” is important: it tells Kubernetes to use the image if it is already present on the node and to pull it only when it is missing, which is what lets the locally built image be used.
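Put together, the image section could look like the sketch below. It assumes the rest of values.yaml is left as scaffolded by helm create, and it shows only the keys discussed here.

```yaml
# values.yaml (sketch; only the image section is shown)
image:
  # Name of the locally built image; the scaffold default is nginx
  repository: backend
  # Use the image already on the node and pull only if it is missing
  pullPolicy: IfNotPresent
  # Left empty so the deployment template falls back to the chart's appVersion
  tag: ""
```

With the appVersion set to 1.0.0, this resolves to the image backend:1.0.0.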

No more changes are required for the backend Helm chart. Next, I will create the Helm chart for the frontend using the following command.

| |

Just like for the Web API, this creates a chart named “frontendapp” under my deploy folder within the FrontendApp, and just like for the Web API I need to update the values.yaml and Chart.yaml files as shown below.

| |

| |

I’ll need to make one additional update to the Helm chart by changing the port-forward from port 8080. Both port-forward commands would otherwise bind to the same local port, so running them at the same time would cause a port collision. Therefore, I will change the UI to port-forward on 8081. You can do it manually in the terminal using kubectl port-forward, but I am going to go ahead and modify the command in the NOTES.txt file.

Deploying App to Kubernetes

Time to use Helm to deploy both apps. First I am going to deploy the backend app using the following command.

| |

The helm install command takes a name for the release, the chart (which can be a path), and a set of flags. In the command above I am not providing any flags, just the name and the path to the chart, which is the current directory.

The output of that command should be the contents of NOTES.txt, as shown below.

| |

Run each of the following commands to begin port forwarding from port 8080 on your machine to port 80 on the container.

| |

Great, I should now be able to run the following curl command and get a successful response from the API.

| |

The command above yields the following response from the API.

| |

Excellent, the API was deployed successfully and it is listening for incoming requests. I don’t need to keep the port-forward proxy open, so I’ll use CTRL+C to end it.

Time to deploy the frontend app.

Just like before, execute the Helm install command.

| |

The command should yield something similar to the following.

| |

Execute each of the commands from the notes to get the port-forwarding proxy going.

| |

Great, let’s test it out. I should be able to open my browser to http://localhost:8081, navigate to the Fetch Data page, and see the randomly generated weather forecast created by the API.

As expected, it works: the Blazor App is running on Kubernetes and it is able to communicate with the Web API.

Now that we have the apps deployed and exchanging data, I’ll introduce Consul and configure it to control how the apps communicate with each other.

Note: Not shown here, but the frontend required an additional update. The URL changed from https://localhost:7043/weatherforecast to http://backendapp:80/weatherforecast, because the frontend pod talks to the backend pod through a Kubernetes Service named “backendapp”. This Service was generated when the app was installed with Helm; see the service.yaml file under the templates folder.
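For illustration, the Service that the scaffolded service.yaml template renders would look roughly like the sketch below. It assumes the release was installed under the name backendapp and that the chart keeps the default service type, port, and selector labels from helm create; none of these details are confirmed beyond the Service name and port 80 in the URL above.

```yaml
# Rendered Service (sketch, assuming helm create defaults and a release named backendapp)
apiVersion: v1
kind: Service
metadata:
  # This name is the DNS name the frontend uses in http://backendapp:80
  name: backendapp
spec:
  type: ClusterIP
  ports:
    - port: 80
      # Refers to the container port named "http" in the deployment template
      targetPort: http
      protocol: TCP
      name: http
  selector:
    # Labels the default chart puts on the backend pods
    app.kubernetes.io/name: backendapp
    app.kubernetes.io/instance: backendapp
```

Because both pods run in the same namespace, the short name backendapp is enough for the frontend to resolve the Service.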

Install Consul

You can install Consul using the Consul K8s CLI, which makes it easy to get up and running. Another option is to install Consul using Helm, and that is the option I will show here.

To install Consul using Helm you will need to add the HashiCorp Helm repository by running the following command.

| |

You should see the following as a confirmation.

| |

With the HashiCorp Helm repo added, you can use the following command to install Consul. Just don’t execute it yet.

| |

The command above will install Consul, but it does so with the default configuration. You can provide your own configuration by creating your own values.yaml. In a text editor, create a values.yaml file and add the following content to it.

| |
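The exact settings depend on your cluster. As an illustration only, and not necessarily the configuration used for this post, a small values.yaml for a single-server test install could look like the sketch below. The keys come from the Consul Helm chart; the values chosen, such as the dc1 datacenter name and a single server replica, are assumptions.

```yaml
# values.yaml for the Consul chart (sketch for a local test cluster, not production)
global:
  # Prefix for created resources, e.g. consul-server, consul-connect-injector
  name: consul
  # Assumed datacenter name
  datacenter: dc1
server:
  # A single server is only acceptable for a demo; use more for high availability
  replicas: 1
connectInject:
  # Deploys the injector that adds sidecar proxies to annotated pods
  enabled: true
ui:
  # Exposes the Consul UI service
  enabled: true
prometheus:
  # Deploys a demo Prometheus server alongside Consul
  enabled: true
```

Any key left out of this file keeps the chart's default value.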

You can see the default values of the Consul chart by inspecting it with the following command.

| |

Or you can visit the official Helm Chart Reference docs to see all the values that can be overridden in your values.yaml file.

Now you can install Consul by executing the following command.

| |

Successful execution of the command above yields the following.

| |

Note: The version installed in this example is Consul 1.15.3, which was the most recent version of Consul as of June 2023.

Now run the following command to see the status of the newly created resources.

| |

| |

The consul-connect-injector deployment is responsible for injecting service mesh sidecars as well as keeping the Kubernetes probes in sync with Consul. The consul-webhook-cert-manager deployment is responsible for creating certificates. The prometheus-server deployment runs Prometheus; see Prometheus for more details. The consul-server stateful set manages the persistent volume claims for the Consul server. Remember that this should be highly available, since losing the volume could result in total loss of data.

Note that these resources could take up to a minute to be created, though normally you should expect them to be created within a couple of seconds.

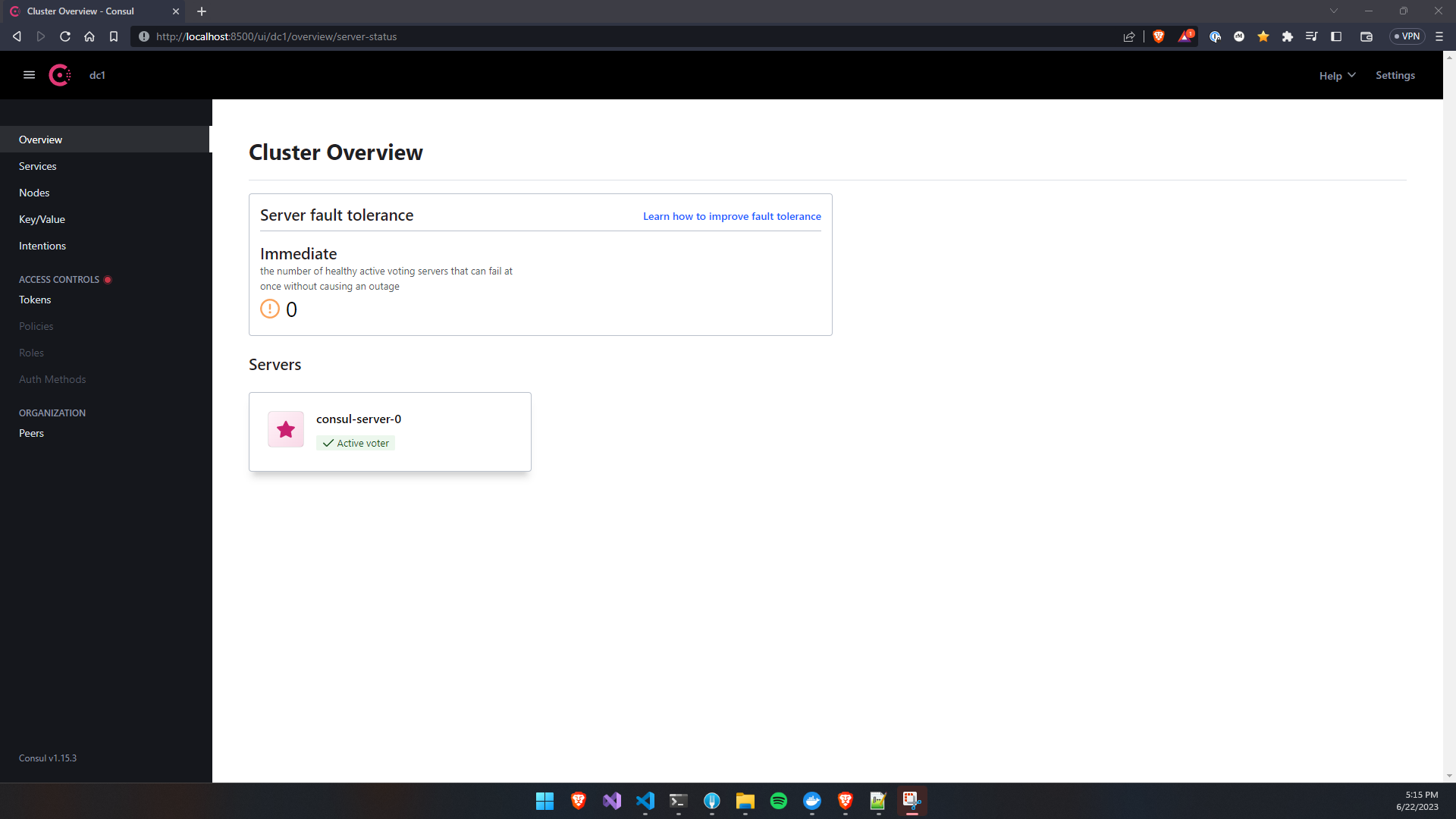

An additional way to confirm that Consul was installed successfully is to connect to the UI using a port-forwarding proxy. You can do so by executing the following command.

| |

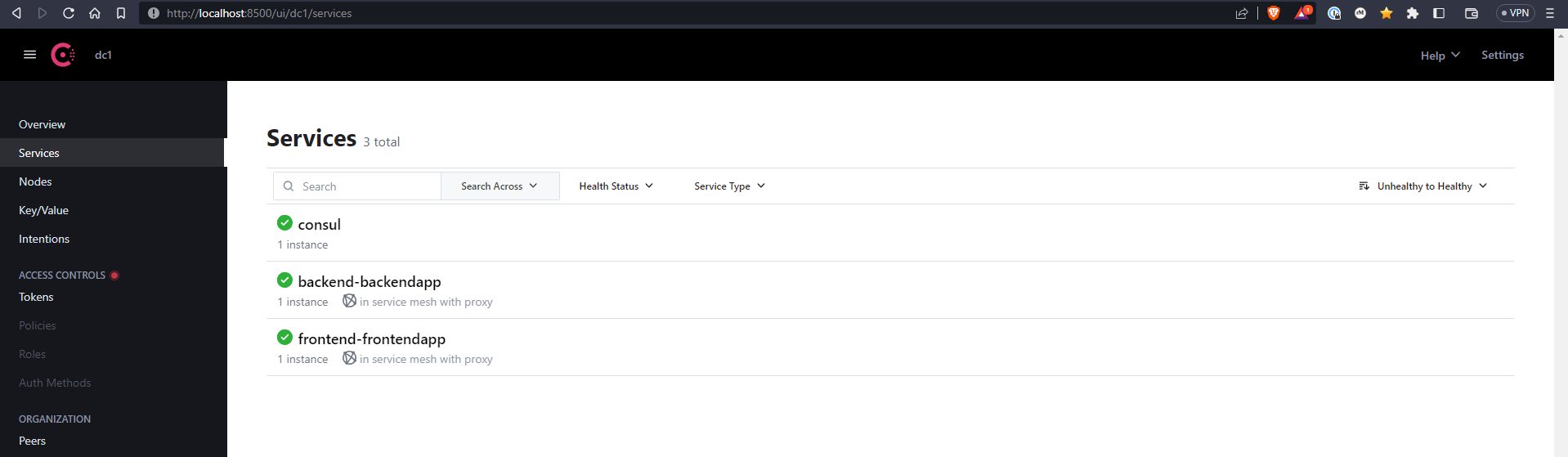

Then, in a web browser, navigate to http://localhost:8500/ui/. You should see the Consul UI as shown in the screenshot below.

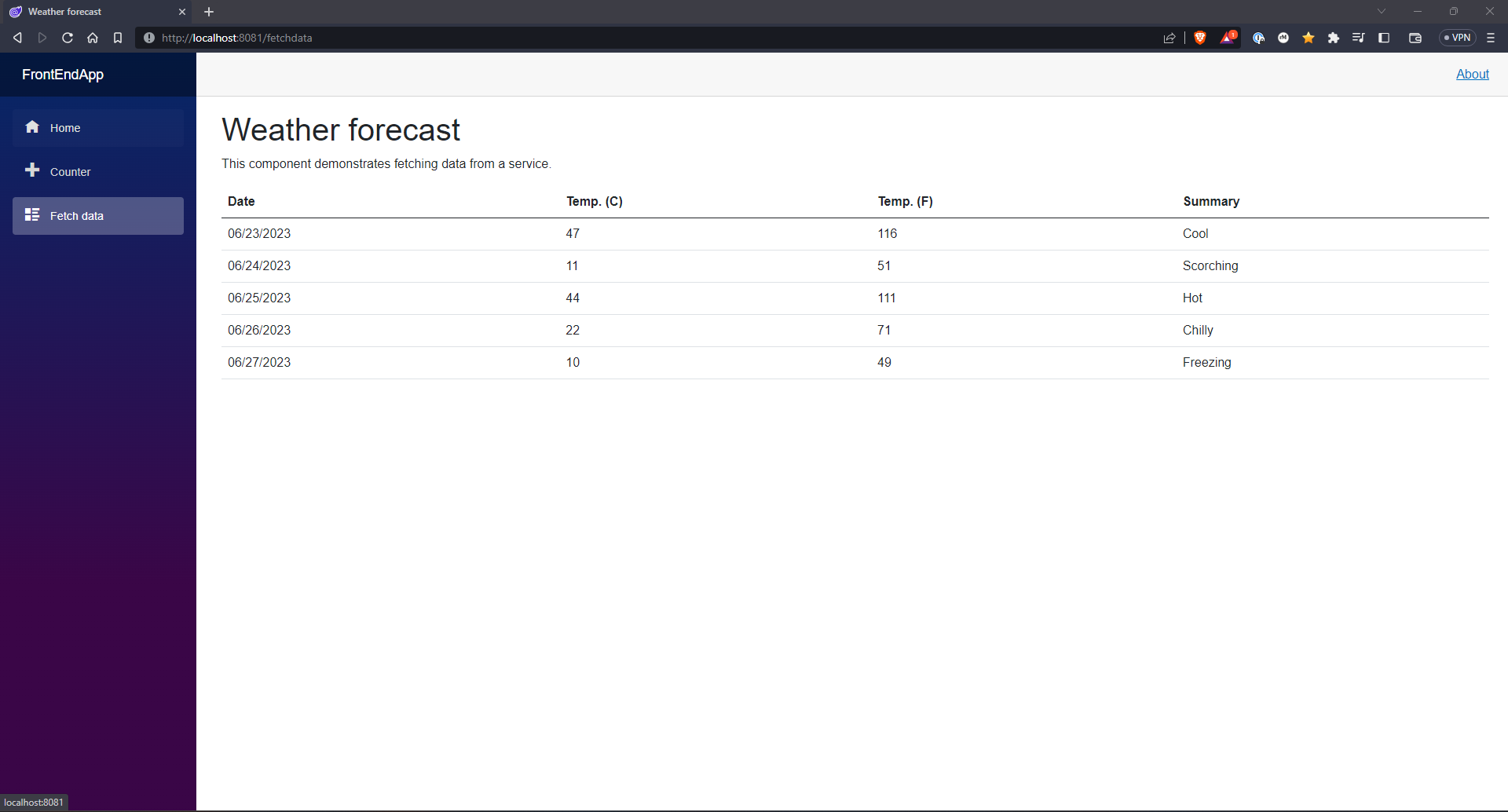

Next, let me confirm that the Blazor app is still up and running.

And it is, great. While Consul has been installed successfully, it is still not handling the network communication between the two pods. For Consul to handle that communication, the pods need to be added to the service mesh via a pod annotation; see Annotations for more details. In Consul, the required annotation is consul.hashicorp.com/connect-inject: "true".

Adding Services to the Mesh

The annotation, consul.hashicorp.com/connect-inject: "true", needs to be added to each pod running under the frontend app deployment. To add the annotation I will need to modify the values.yaml file from

| |

to the following.

| |
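As a minimal sketch, assuming the chart still uses the podAnnotations value that helm create scaffolds (an empty map by default, copied by the deployment template onto every pod), the change amounts to this:

```yaml
# values.yaml (sketch; only the podAnnotations section is shown)
# Scaffold default was: podAnnotations: {}
podAnnotations:
  # Asks Consul's injector to add a sidecar proxy to each pod.
  # The value must be the string "true", so keep the quotes.
  consul.hashicorp.com/connect-inject: "true"
```

Annotation values in Kubernetes are always strings, which is why an unquoted boolean would be rejected.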

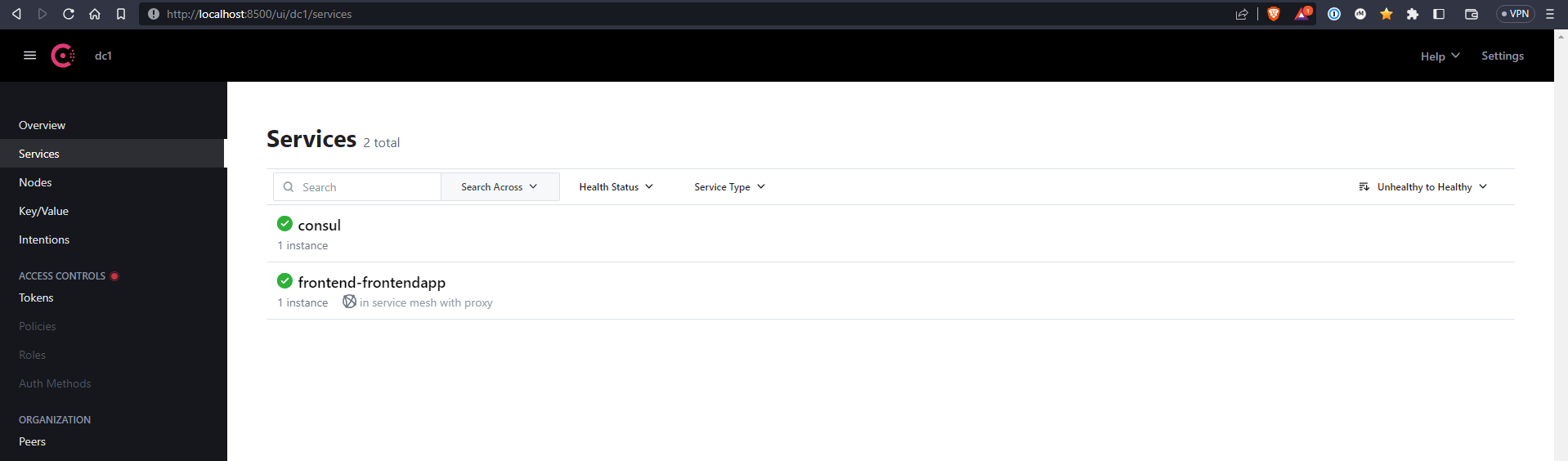

With the annotation now added to the deployment.yaml file, the app can be redeployed. After redeploying the app, and returning to the Consul UI, the frontend app should now be registered under Services as shown in the screenshot below.

Consul uses a mutating webhook, that is, an HTTP callback that allows third-party applications to modify Kubernetes resources. When the frontend pod was created, Kubernetes called Consul’s mutating webhook, which allows Consul to look at the pod definition to see if it has the consul.hashicorp.com/connect-inject: "true" annotation. If it does, Consul modifies the pod to add a sidecar proxy.

Time to add the “backendapp” to the mesh. As before, I will update the values.yaml file to include the required annotation on any backend pod.

| |

Redeploying the backend app and visiting the Consul UI shows the app was successfully registered, see the screenshot below.

This means both apps are now secured by the Consul Service Mesh, since Consul is secure by default. It also means that no traffic from outside the mesh can reach the apps. That is not very useful, but I’ll change that in part 2 of this blog series.

First, let’s prove that the apps are secured by Consul and that only authenticated and authorized traffic is allowed to reach the applications. In my current cluster, aside from the frontend and backend apps, I have a few other pods, one of them being the Consul server itself. If I have configured everything correctly, then the Consul server pod should not be able to communicate with the frontend app. This can be proven by executing the following command.

| |

I get this as a response.

| |

This means the frontend app is secure: containers that are not in the Service Mesh cannot talk to applications that are within the mesh. Essentially, the Service Mesh rejects any unauthorized traffic. I can further prove that by removing the Consul annotation from the frontendapp, redeploying the frontend app, and executing the same command. This time I get a different response.

| |

The response is the HTML of the main landing page of the Blazor application, which means that the consul-server pod is now allowed to communicate with the frontend app, due to the removal of the frontend app from the Service Mesh. This is great, but we need to allow traffic from outside the mesh to reach the apps that live within the Service Mesh, and we also need to control how apps communicate with each other inside the mesh. Allowing external traffic into the Service Mesh in a secure fashion will be the responsibility of the Ingress gateways, which I’ll cover in part 2 of this series along with how to establish strong ACLs within the Service Mesh.

Conclusion

Using Consul as a Service Mesh in Kubernetes turned out to be easier than expected. The documentation provided by HashiCorp was super useful and pointed me in the right direction whenever I felt lost. I did encounter a weird behavior with the Web API: the liveness probe was pointing to /swagger, just like the readiness probe, and while the readiness probe was succeeding, the liveness probe was failing, so I had to remove the liveness probe from the Helm chart. I’m not sure what the issue was. Once I discover the problem I will share it here.
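For context, a sketch of what the probe section of the container spec might look like after that workaround. It assumes the probes live in the scaffolded templates/deployment.yaml and use the named container port http from helm create; only the /swagger path and the removal of the liveness probe come from the description above.

```yaml
# templates/deployment.yaml (sketch; probe section of the container spec only)
# livenessProbe removed as a workaround, since it kept failing
readinessProbe:
  httpGet:
    # Same path both probes originally pointed to
    path: /swagger
    # Named container port from the scaffolded chart
    port: http
```

Without a liveness probe, Kubernetes will not restart the container when the app stops responding, so this is a stopgap rather than a fix.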

Part two of this series will be on the Consul Ingress Gateway. After that, I plan to move on to Istio and Linkerd, two other popular Service Mesh tools in Kubernetes.

Till then, cheerio.